Embodied Interaction

Aalto University, Lecturer: Matti Niinimäki

Hand Gesture Assignment

We started by reviewing some famous hand gestures. Since Italians are renowned for using their hands a lot, even in everyday conversation, we became interested in gestures like the pinched fingers, but with some changes. We realized that opening the pinched-fingers pose conveys a sense of growth, blooming, and prosperity.

This could fit well in a relaxing VR gardening experience. With hand detection, players can not only place flowers in the scene but also feel them bloom, for a deeper gardening experience. Here are two example actions using this gesture.

Here you can see a demo of how it can be used in a 2D space. We also implemented a simple interface that lets users select, scale, and create flowers.

Final Project Idea

Spectrum Mirror

Whenever I listen to music, I feel a kind of energy around me, bouncing around with the tones and changing my mood. Obviously, it is not visible! But what if we could show it using new technologies to create an immersive musical experience?

From Keijiro Takahashi

The idea is simple: music and sound have been visualized through their spectrum for a long time. Here I'll bind that spectrum data to different VFX properties to achieve the effect described above. For the first demo, players will stand in front of a screen and see themselves rendered as a visual effect. They can play any song from Spotify, listen to it, and watch their VFX replica react on the screen.

For the first demo, I'll put most of my time into creating appealing visuals and binding the music frequencies to them in a meaningful way. But this idea can go further, in terms of both visuals and audio analysis.

Tools

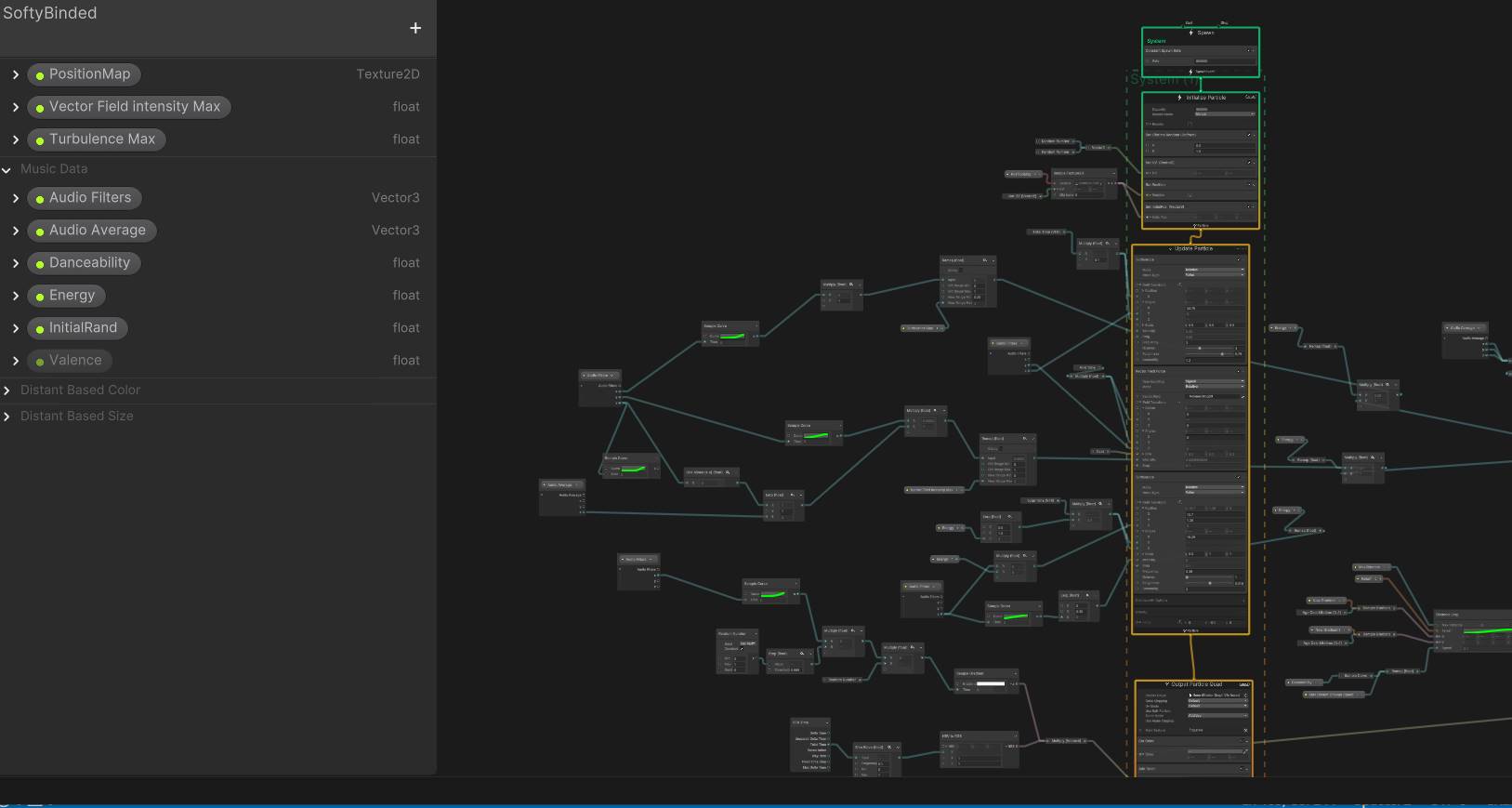

The visual effects are made in the Unity game engine using its GPU-based particle system (VFX Graph).

To use Azure Kinect data in Unity, I used a free Unity package from here.

Music Features

My goal is that users can play any music they want from Spotify and have the VFX react to that music in real time.

For getting Audio spectrum data I use the

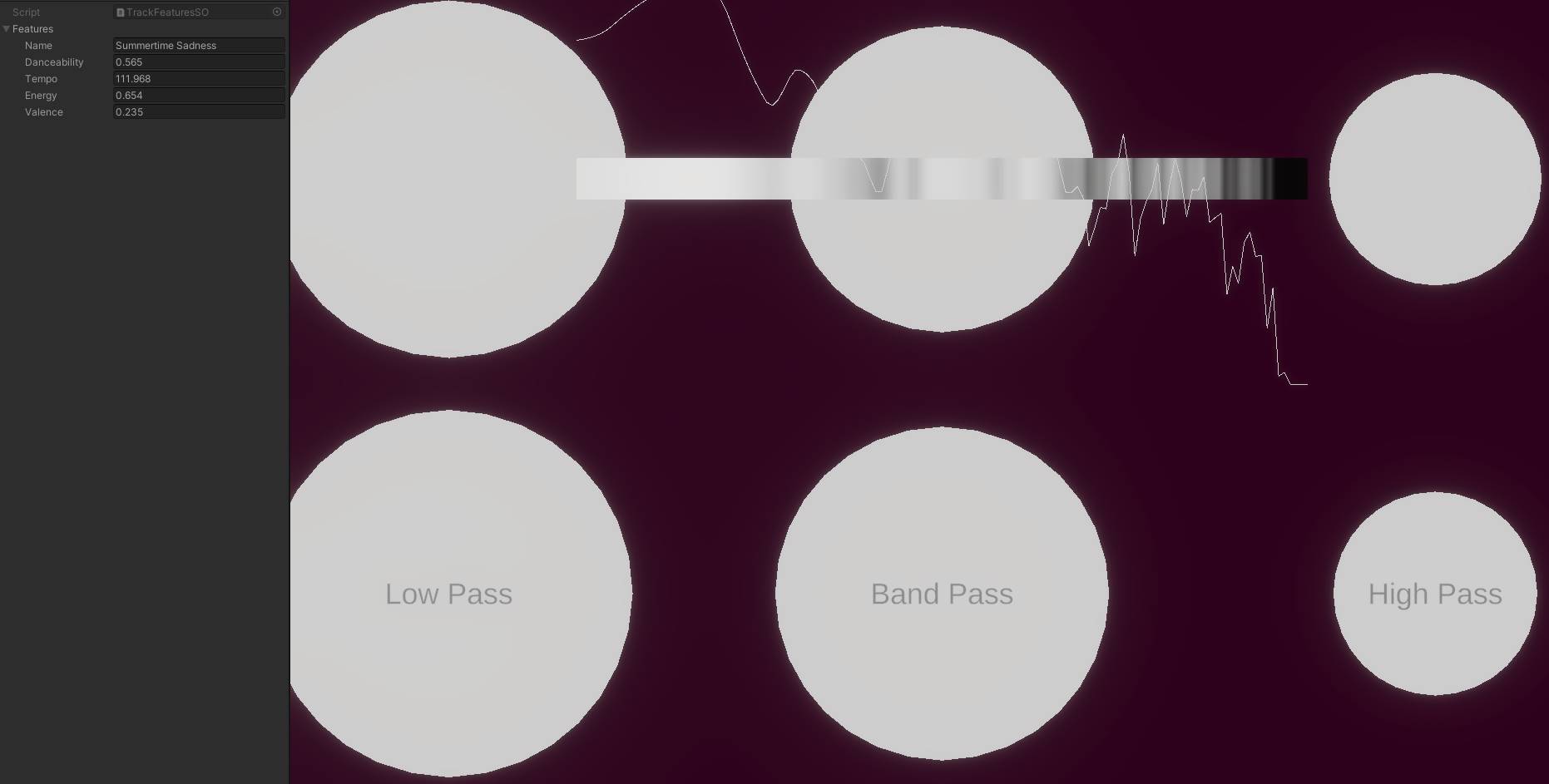

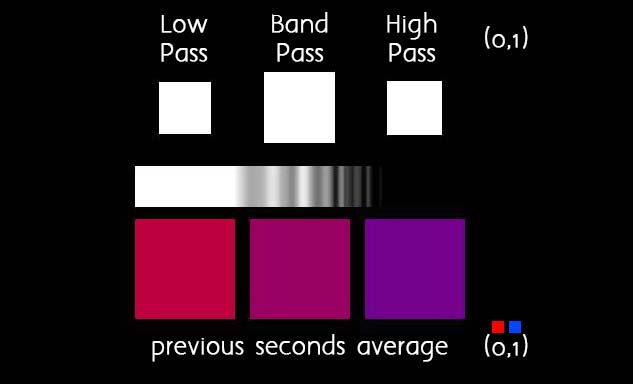

Keijiro LASP repository which provide the FFT (Fast Fourier Transform). I have access to Low Pass, Band Pass, High Pass, and spectrum data. In Addition, I use some custom scripts to smooth those values based on my needs.
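LASP computes these levels inside Unity. To illustrate the general idea of splitting one block of audio into low, band, and high levels, here is a rough Python sketch that uses first-order (one-pole) IIR filters. The filter design, the 250 Hz and 4 kHz crossover points, and all function names are assumptions for illustration, not LASP's actual implementation or API.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """First-order IIR low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y = 0.0
    out = []
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out


def rms(samples):
    """Root-mean-square level of a block of samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def band_levels(samples, sample_rate, low_cut=250.0, high_cut=4000.0):
    """Split a block into low / band / high parts and return their RMS levels.

    Hypothetical crossover points: below low_cut counts as "low", between the
    two cutoffs as "band", and above high_cut as "high".
    """
    low = one_pole_lowpass(samples, low_cut, sample_rate)
    below_high = one_pole_lowpass(samples, high_cut, sample_rate)
    # Band-pass approximated as the difference of two low-passes.
    band = [b - l for b, l in zip(below_high, low)]
    # High-pass approximated as the complement of the upper low-pass.
    high = [x - b for x, b in zip(samples, below_high)]
    return {"low": rms(low), "band": rms(band), "high": rms(high)}
```

For example, feeding `band_levels` 0.1 s of a 100 Hz sine at 48 kHz yields a "low" level well above the other two, while a 10 kHz sine pushes the "high" level to the top. One-pole filters have gentle slopes, so the bands overlap; steeper filters (as a dedicated audio plugin would use) separate them more cleanly.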

I also managed to use Spotify4Unity, which uses SpotifyAPI-NET, to get some attributes of the currently playing music on the fly. Now, whenever a new track plays, I have access to its Tempo (in beats per minute) and its Energy, Valence, and Danceability (each ranging from 0 to 1).
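In the Spotify Web API, Energy, Valence, and Danceability are reported between 0 and 1, while Tempo is reported in beats per minute, so Tempo needs rescaling before it can drive a VFX property the same way as the others. The following is a minimal Python sketch of that step. The `TrackFeatures` container and the 60 to 200 BPM window are hypothetical choices, not part of Spotify4Unity or SpotifyAPI-NET.

```python
from dataclasses import dataclass


def clamp01(value: float) -> float:
    """Clamp a value into the [0, 1] range."""
    return max(0.0, min(1.0, value))


@dataclass
class TrackFeatures:
    """Hypothetical container for the per-track attributes used by the VFX."""
    tempo_bpm: float
    energy: float
    valence: float
    danceability: float

    def normalized(self, min_bpm: float = 60.0, max_bpm: float = 200.0) -> dict:
        """Return every attribute in 0..1 so all of them can drive VFX parameters.

        The 60-200 BPM window is an assumption; tempos outside it are clamped.
        """
        tempo01 = (self.tempo_bpm - min_bpm) / (max_bpm - min_bpm)
        return {
            "tempo": clamp01(tempo01),
            "energy": clamp01(self.energy),
            "valence": clamp01(self.valence),
            "danceability": clamp01(self.danceability),
        }
```

With these defaults, a 130 BPM track maps to a tempo value of 0.5. Clamping the other three values is only a safety net in case a source ever reports values slightly outside the documented range.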

For the course demo, I mainly used the low-, band-, and high-pass data of the spectrum, including their averages over the past few seconds. The passes are processed in a custom script that smooths them and calculates their recent average.
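The custom script itself is not shown here. As one common way to do this kind of job, the Python sketch below combines exponential smoothing, which removes frame-to-frame jitter, with an average over a sliding time window of the last few seconds. The `SmoothedLevel` class, the 0.2 smoothing factor, and the 3-second window are hypothetical, not values from the project.

```python
from collections import deque


class SmoothedLevel:
    """Exponentially smoothed level plus its average over a recent time window."""

    def __init__(self, smoothing: float = 0.2, window_seconds: float = 3.0):
        self.smoothing = smoothing            # 0..1, higher reacts faster
        self.window_seconds = window_seconds
        self.value = 0.0
        self._history = deque()               # (timestamp, smoothed value) pairs

    def update(self, raw: float, now: float) -> float:
        """Feed one raw level at time `now` (seconds) and return the smoothed value."""
        self.value += self.smoothing * (raw - self.value)
        self._history.append((now, self.value))
        # Drop entries older than the window so the average stays "recent".
        while self._history and now - self._history[0][0] > self.window_seconds:
            self._history.popleft()
        return self.value

    @property
    def recent_average(self) -> float:
        """Mean of the smoothed values still inside the time window."""
        if not self._history:
            return 0.0
        return sum(v for _, v in self._history) / len(self._history)
```

One instance per pass (low, band, high) would be updated every frame with the raw level and the current time. The smoothed value reacts quickly to beats, while `recent_average` gives a slower baseline that reflects the overall loudness of the section.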

For the VFX part, I use those three passes as inputs to three different noises and use the song's Energy value as the Drag parameter on each of them. The effect has two predefined colors that are set based on camera distance, and for each song its Hue value changes randomly. The colors also move based on the Danceability of the playing music.
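The actual mapping lives in the VFX Graph, but its per-frame logic can be illustrated outside Unity. The Python sketch below is a hypothetical illustration: the `VfxParams` fields, the linear Energy-to-Drag scale, the 0.5 to 5 m distance range, and the color-speed scale are all assumptions, and Python's `colorsys` stands in for Unity's own color utilities.

```python
import colorsys
import random
from dataclasses import dataclass


@dataclass
class VfxParams:
    """Hypothetical per-frame inputs for the particle effect."""
    noise_intensities: tuple  # (low, band, high), one per noise
    drag: float
    color: tuple              # RGB in 0..1
    color_speed: float


def random_hue_shift(rng: random.Random) -> float:
    """Pick a new hue offset in [0, 1) once per song."""
    return rng.random()


def shift_hue(rgb, hue_shift):
    """Rotate an RGB color around the hue wheel by hue_shift (0..1)."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb((h + hue_shift) % 1.0, s, v)


def lerp_color(a, b, t):
    """Linear interpolation between two RGB colors."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def build_params(low, band, high, energy, danceability, camera_distance,
                 near_color, far_color, hue_shift,
                 near_m=0.5, far_m=5.0, max_drag=4.0, max_color_speed=2.0):
    """Map audio features and camera distance to hypothetical VFX inputs."""
    # Blend between the two predefined colors by camera distance, clamped to 0..1.
    t = min(max((camera_distance - near_m) / (far_m - near_m), 0.0), 1.0)
    color = shift_hue(lerp_color(near_color, far_color, t), hue_shift)
    return VfxParams(
        noise_intensities=(low, band, high),          # each pass drives one noise
        drag=energy * max_drag,                       # song Energy -> Drag
        color=color,
        color_speed=danceability * max_color_speed,   # Danceability -> color motion
    )
```

In this sketch, `random_hue_shift` would be called once when a new track starts, and `build_params` once per frame with the smoothed pass levels. Keeping the hue offset per song rather than per frame gives each track a stable palette.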

Final Showcase

My main goal for this project was to connect technology and art in a novel way and to learn new tools and techniques. I had wanted to contribute to a digital art project for a while, and this course was a great start. During the course, I got familiar with TouchDesigner and started learning VFX Graph, which I had wanted to learn for some time. Getting to know different sensors and the overall possibilities in this area was also very informative.

The current version of the project works as a digital mirror: users stand in front of it and watch the visuals react to the music of their choice. Because I only use depth detection, there is no limit on the number of users, and it captures each user's whole silhouette, including clothes, hair, bags, and so on, in an abstract way. Fortunately, most users were happy with the audio visualization side of the project. It currently works best with music that has many ups and downs, but it works well enough in general. In addition, in some playtests, users were so engaged playing with the visuals and moving around that audio responsiveness was not their first concern.

I also had the idea of adding body (hand) detection to this system to give players another level of immersion, for example by detecting hand collisions with particles or triggering momentary effects from specific hand gestures (clapping, etc.). Unfortunately, I ran into a major library conflict with my current depth package and couldn't resolve it in time, but I think it is doable in the future.

This idea can be used and expanded in different directions, from placing this mirror concept in digital art museums to using it in dance and live shows with even more cameras and visuals. For now, I'll start looking for and applying to support programs and grants to extend this project. I believe this mirror idea fits the depth detection system well, and I would love to work with technical composers and perhaps art directors for the final polish.

From Keijiro Takahashi